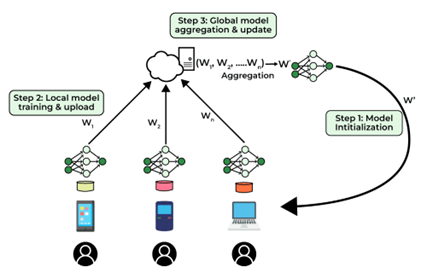

What is Federated Learning?

Federated learning (FL) is a decentralized machine learning approach where multiple devices collaboratively train a model without sharing their raw data. Each device uses its data to update the model and then sends these updates to a central server. The server combines these updates to improve the overall model. This method enhances data privacy and security, as sensitive information remains on the local devices. Federated learning is beneficial when data is distributed across many users, such as in mobile applications, healthcare, and IoT devices, enabling advanced analytics while respecting user privacy and regulatory constraints.
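The train-locally, send-updates, combine-on-server loop described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: it assumes a one-parameter model `y = weight * x`, and it assumes the server combines updates with a sample-weighted average in the style of federated averaging, which is one common choice. The function names, learning rate, and toy data are all hypothetical.

```python
from typing import List, Tuple

# One device's private data: (x, y) pairs that never leave the device.
Dataset = List[Tuple[float, float]]


def local_update(weight: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one device for the model y = weight * x."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_round(global_weight: float, clients: List[Dataset]) -> float:
    """One round: every client trains locally, then the server averages the results."""
    total_samples = sum(len(data) for data in clients)
    # Only the updated weight and the sample count are sent to the server.
    updates = [(local_update(global_weight, data), len(data)) for data in clients]
    # Clients with more data get proportionally more influence.
    return sum(w * n for w, n in updates) / total_samples


def train(clients: List[Dataset], rounds: int = 50, initial_weight: float = 0.0) -> float:
    """Repeat federated rounds, starting from an initial global weight."""
    weight = initial_weight
    for _ in range(rounds):
        weight = federated_round(weight, clients)
    return weight


# Hypothetical toy data: three devices whose private samples all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
final_weight = train(clients)
print(f"learned weight: {final_weight:.4f}")
```

In this sketch the server only ever sees each client's updated weight and sample count; the `(x, y)` pairs stay inside `local_update`.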

Federated Learning Applications:

1. Internet of Things (IoT):

Federated learning is applied in the IoT ecosystem, where numerous smart devices collect and process data in a collaborative effort. For example, smart home devices like security cameras and voice assistants can work together to improve their skills in recognizing voices, predicting activities, and managing energy. They do this by sharing only the updates to their learning models, not the data they collect. This collaborative approach ensures that user data collected by individual devices remains local, thereby protecting privacy while enabling more intelligent and more efficient IoT systems.

2. Healthcare:

In healthcare, federated learning allows hospitals to work together to train models that can predict disease outbreaks or patient readmissions without sharing their patients' private data. Each hospital uses its own data to improve the model and then shares only the improvements, not the actual data. This way, the model benefits from a wide variety of data from different hospitals, making it more accurate. For example, a federated learning model trained across several hospitals can better predict patient outcomes for rare diseases, because the combined training data is larger and more varied than any single hospital's. This approach helps maintain patient confidentiality and comply with the Health Insurance Portability and Accountability Act (HIPAA).

3. Mobile Application:

How to implement secure federated learning?

Data encryption techniques in federated learning:

1. Homomorphic Encryption:

2. Secure Multi-Party Computation (SMPC):

3. Differential Privacy:

4. Federated Averaging with Encryption:

5. Blockchain for Secure Aggregation:
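Of the techniques listed above, the idea behind secure aggregation (item 2, SMPC) can be illustrated with pairwise additive masking: every pair of clients agrees on a random mask that one adds and the other subtracts, so the masks cancel when the server sums the updates. The sketch below is a toy under strong assumptions: a single seeded `random.Random` stands in for the pairwise shared secrets, which is not cryptographically secure, and client dropouts are ignored. All names are hypothetical.

```python
import random
from typing import List


def mask_updates(updates: List[List[float]], seed: int = 0) -> List[List[float]]:
    """Return masked copies of the client updates whose sum equals the true sum."""
    # Assumption: one seeded generator stands in for secrets that each pair
    # of clients would negotiate privately in a real protocol.
    rng = random.Random(seed)
    num_clients, dim = len(updates), len(updates[0])
    masked = [list(update) for update in updates]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            mask = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            for k in range(dim):
                masked[i][k] += mask[k]  # client i adds the shared mask
                masked[j][k] -= mask[k]  # client j subtracts the same mask
    return masked


def aggregate(vectors: List[List[float]]) -> List[float]:
    """Server side: average the vectors coordinate by coordinate."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Hypothetical model updates from three clients.
raw_updates = [[0.10, -0.20], [0.30, 0.40], [-0.50, 0.60]]
masked_updates = mask_updates(raw_updates)

true_average = aggregate(raw_updates)
masked_average = aggregate(masked_updates)
```

The server receives only `masked_updates`, which individually look like noise, yet `masked_average` matches `true_average` up to floating-point rounding.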

Federated Learning vs. centralized machine learning for privacy

I. Data Distribution:

Classical machine learning typically assumes that data is independently and identically distributed (IID) and can be split evenly across participants. That assumption is impractical in real federated scenarios, where the number of participants varies and each user holds a different amount and type of data. Federated learning therefore treats the data as non-identically distributed: each participant trains on whatever data it holds locally, and the training process has to accommodate the variability in the number of participants and in their data.
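The difference between the two data assumptions can be made concrete by partitioning the same labeled dataset in two ways. The following is a hedged sketch for simulation purposes only: in a real deployment nobody partitions the data, since each device simply holds what it collected. The function names, the `k + 1` size rule, and the toy labels are assumptions made for illustration.

```python
import random
from collections import Counter
from typing import List


def iid_partition(labels: List[int], num_clients: int, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices and deal them out evenly, as classical ML assumes."""
    rng = random.Random(seed)
    indices = list(range(len(labels)))
    rng.shuffle(indices)
    return [indices[c::num_clients] for c in range(num_clients)]


def label_skew_partition(labels: List[int], num_clients: int) -> List[List[int]]:
    """Sort by label and cut into unequal chunks, so clients see few classes each."""
    indices = sorted(range(len(labels)), key=lambda i: labels[i])
    # Assumed size rule: client k receives a share proportional to k + 1.
    shares = [k + 1 for k in range(num_clients)]
    parts, start = [], 0
    for k, share in enumerate(shares):
        if k == num_clients - 1:
            end = len(indices)  # last client takes whatever remains
        else:
            end = start + len(indices) * share // sum(shares)
        parts.append(indices[start:end])
        start = end
    return parts


# Hypothetical dataset: 120 samples, 30 from each of 4 classes.
labels = [i % 4 for i in range(120)]

iid_parts = iid_partition(labels, num_clients=3)
skewed_parts = label_skew_partition(labels, num_clients=3)

for name, parts in (("IID", iid_parts), ("non-IID", skewed_parts)):
    for client_id, part in enumerate(parts):
        histogram = Counter(labels[i] for i in part)
        print(name, "client", client_id, "size", len(part), dict(sorted(histogram.items())))
```

Under the IID split every client gets 40 samples drawn from all classes; under the skewed split the clients hold 20, 40, and 60 samples covering only one or two classes each, which is closer to what federated training has to cope with.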

II. Continual Learning:

In classical machine learning, a central model is trained on all available data in a centralized setting. When quick responses are needed, however, communication delays between user devices and the central server can degrade the user experience. Federated learning can run on user devices and avoid that round trip, but continual learning on the device is difficult because models typically require access to the complete dataset, which is not available locally. This tension between the need for fast responses and the difficulty of continual learning on user devices is a significant challenge for federated learning implementations.

III. Data Privacy:

Federated learning addresses privacy risks by allowing local model training on user devices, eliminating the need to share data with a central server. Unlike classical machine learning, where training occurs on a single server, federated learning enables cooperative model training on decentralized data. This approach ensures continuous learning without exposing sensitive data to the cloud server, enhancing privacy.

IV. Aggregation of data sets:

Classical machine learning centralizes user data, which risks privacy violations and data breaches. Federated learning updates models continuously, incorporating client input without aggregating the underlying data, which preserves privacy and security. Looking ahead, AI and ML promise scalable systems, on-the-go model creation, and precise, timely results for business applications, particularly in customer service.

Our Office

GREER

South Carolina, 29650,

United States
