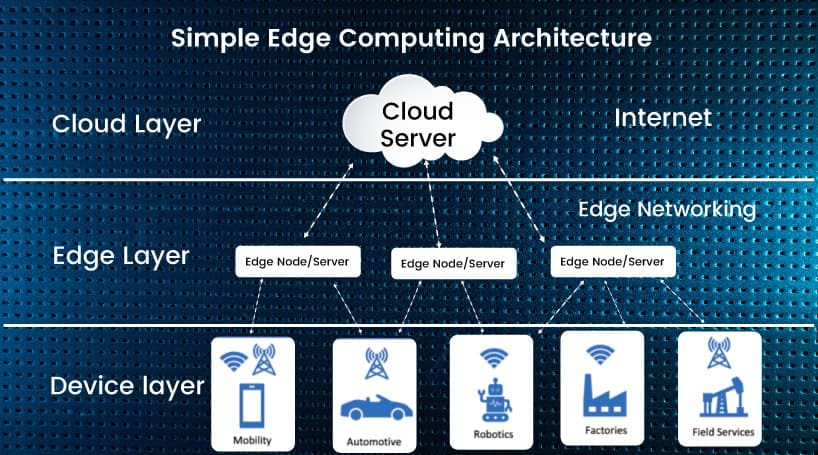

Latency reduction is one of the main advantages of Edge computing. Because data is processed locally, close to where it is produced, Edge computing avoids the round trip between devices and remote servers that traditional cloud-centric approaches require. This enables near-real-time responses, which are crucial in applications that depend on split-second decisions.
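To make the latency argument concrete, the short calculation below converts a processing delay into the distance a vehicle covers while it waits for a decision. The 100 km/h speed and the 100 ms and 10 ms delays are illustrative assumptions, not measurements of any particular cloud or edge system.

```python
# Illustrative arithmetic only: the speed and latencies are assumed example values.

def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled by a vehicle moving at speed_kmh during delay_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Hypothetical delays: a round trip to a remote server vs. on-device processing.
for label, delay_ms in [("cloud round trip", 100.0), ("local edge inference", 10.0)]:
    metres = distance_during_delay(100.0, delay_ms)
    print(f"{label}: {delay_ms:.0f} ms -> {metres:.2f} m at 100 km/h")
```

Under these assumed numbers, every 100 ms of delay means almost three metres of travel before the vehicle can react, which is why removing a network round trip matters for split-second decisions.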

Edge computing also eases the load on network bandwidth. Because data is processed locally, devices often need to transmit only results or summaries rather than raw data streams. This reduces network congestion and saves bandwidth, which matters most in environments with constrained network resources.
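A minimal sketch of the bandwidth argument, assuming a device that reduces each window of raw sensor readings to a (min, max, mean) summary before transmitting it. The window size, the choice of summary statistics, and the synthetic readings are hypothetical; real deployments decide what to send based on what the downstream application needs.

```python
import statistics
import struct


def raw_payload(readings: list[float]) -> bytes:
    """Pack every raw reading as a little-endian double, as a device without local processing would send."""
    return struct.pack(f"<{len(readings)}d", *readings)


def summarize_window(readings: list[float]) -> bytes:
    """Pack a window of raw readings into a compact (min, max, mean) summary."""
    return struct.pack("<3d", min(readings), max(readings), statistics.fmean(readings))


# Hypothetical window: 600 readings (e.g. one minute of a 10 Hz sensor).
window = [20.0 + (i % 7) * 0.1 for i in range(600)]
raw = raw_payload(window)
summary = summarize_window(window)
print(len(raw), len(summary))  # 4800 24
```

Sending three 8-byte values instead of 600 shrinks this window's payload from 4,800 to 24 bytes; the trade-off is that the raw readings are no longer available upstream.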

Cost Savings

The decentralized nature of Edge computing can also translate into cost savings, since edge devices handle many tasks themselves instead of relying heavily on cloud resources. By distributing the computational load across a network of edge devices, organizations can reduce cloud compute and data-transfer costs while maintaining performance and scalability.

The proliferation of mobile devices, Internet of Things (IoT) sensors, and AI applications has led to a surge in data generated at the network edge. Traditional cloud-centric approaches, while well suited to conventional workloads, struggle to cope with the volume and velocity of data arriving from many dispersed sources. Edge computing addresses this problem by moving processing closer to where the data is generated, narrowing the gap between data generation and processing.

Alongside the rise of Edge computing, Edge Intelligence, also known as Edge AI, has emerged as a paradigm that combines artificial intelligence with decentralized computing. Current methods in Artificial Intelligence (AI) and Machine Learning (ML) typically assume that computations run on powerful infrastructure, such as data centers with ample computing and data storage resources. Edge AI instead runs AI and ML algorithms locally, on devices situated close to the data sources, so that data is both generated and processed at the edge. This brings benefits including enhanced privacy, security, and scalability.

Edge Intelligence helps preserve user privacy by keeping sensitive data on the device and sharing only model results. This reduces concerns about data sovereignty and privacy breaches, builds trust among users, and strengthens the security posture of AI systems.

By broadening access to AI capabilities, Edge Intelligence lets organizations deploy machine learning models across a diverse range of edge devices. This in turn encourages innovation and the spread of AI-powered applications and services across many domains.

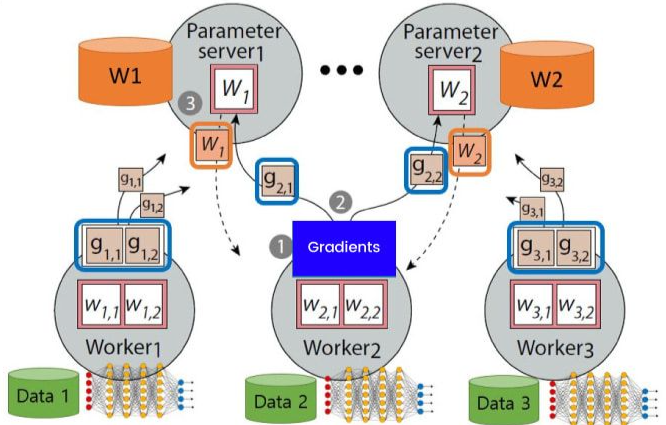

Federated learning is a machine learning technique that enables collaborative model training across decentralized edge devices or servers without exchanging raw data. This approach avoids the challenges of centralized data aggregation while helping to preserve user privacy and data sovereignty.

Federated learning typically begins with a base machine learning model in the cloud, whose architecture is chosen up front and whose parameters may be initialized, for example, by training on publicly available data. Participating user devices volunteer to train the model locally, using their own datasets to refine the model parameters over several training iterations. The user data itself never leaves the device, which supports adherence to privacy regulations and user consent. Only the resulting model updates, which capture what was learned from the local data, are sent to the cloud for aggregation, optionally protected by encryption or secure aggregation. The cloud then combines these updates, for example with federated averaging, which computes an average of the client models weighted by each client's number of training examples, and uses the result as the new base model for the next round. Repeating this process improves the model over time without collecting raw user data centrally.
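The training loop described above can be sketched as a minimal federated averaging example. This is a simplified illustration, not a production protocol: the model is a one-dimensional linear regression, every client participates in every round, local training is full-batch gradient descent, no encryption is shown, and the learning rate, epoch count, round count, and synthetic client data are all assumptions. As in federated averaging, the server weights each client's model by the number of local examples it was trained on.

```python
import random


def local_train(weights, data, lr=0.05, epochs=20):
    """Full-batch gradient descent on one client's private data.

    The model is y = w * x + b with mean squared error, an assumed toy model.
    Only the updated (w, b) is returned; the raw data never leaves this function.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_averaging(global_weights, client_datasets, rounds=100):
    """Each round: clients train locally, then the server averages weighted by sample count."""
    for _ in range(rounds):
        results = [(local_train(global_weights, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in results)
        global_weights = (
            sum(cw * n for (cw, _), n in results) / total,
            sum(cb * n for (_, cb), n in results) / total,
        )
    return global_weights


rng = random.Random(0)


def make_client(n):
    # Hypothetical private dataset sampled from y = 3x + 1 with small noise.
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


clients = [make_client(n) for n in (30, 50, 20)]
w, b = federated_averaging((0.0, 0.0), clients)
print(f"w = {w:.2f}, b = {b:.2f}")  # close to the true w = 3, b = 1
```

Weighting by sample count means a client with 50 examples influences the global model more than one with 20, which mirrors how a centrally trained model would weight the pooled data.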

Federated learning is designed around preserving user privacy. By keeping raw data on user devices, it substantially reduces the risk of data exposure, which fosters trust among users and strengthens the security posture of AI systems. It does not eliminate privacy risk entirely, however: model updates can still leak information about the underlying data, which is why deployments often add safeguards such as secure aggregation or differential privacy.
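Keeping raw data on the device does not by itself hide each client's model update from the server. One common additional safeguard is secure aggregation, in which clients mask their updates so the server can recover only their sum. The sketch below shows just the core pairwise-masking idea: each pair of clients shares a random mask that one adds and the other subtracts, so all masks cancel in the total. It is simplified under stated assumptions: a single seeded random generator stands in for pairwise key agreement, updates are scalars encoded as fixed-point integers (the modulus and scale are arbitrary choices), and client dropouts are not handled.

```python
import random

MODULUS = 2**32  # assumed size of the integer ring used for masking
SCALE = 2**16    # assumed fixed-point scale for encoding float updates


def encode(x: float) -> int:
    """Encode a float as a fixed-point integer modulo MODULUS."""
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    """Map a ring element back to a signed value and undo the scaling."""
    if v >= MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def pairwise_masks(num_clients: int, rng: random.Random) -> dict[tuple[int, int], int]:
    """One random mask per client pair (i, j) with i < j.

    Stand-in only: in a real protocol each pair derives its mask from a
    shared secret, and the server never learns it.
    """
    return {(i, j): rng.randrange(MODULUS)
            for i in range(num_clients) for j in range(i + 1, num_clients)}


def mask_update(client: int, value: float, masks: dict[tuple[int, int], int], num_clients: int) -> int:
    """Add masks shared with higher-numbered clients, subtract those shared with lower-numbered ones."""
    masked = encode(value)
    for other in range(num_clients):
        if client < other:
            masked += masks[(client, other)]
        elif client > other:
            masked -= masks[(other, client)]
    return masked % MODULUS


updates = [0.25, -1.5, 0.75]  # hypothetical scalar updates, one per client
num_clients = len(updates)
masks = pairwise_masks(num_clients, random.Random(42))
masked = [mask_update(i, u, masks, num_clients) for i, u in enumerate(updates)]

# The server sees only the masked values; their sum reveals just the total.
aggregate = decode(sum(masked) % MODULUS)
print(aggregate)  # -0.5
```

Each masked value on its own looks like a random number to the server, yet the masks cancel exactly in the sum, so the aggregate equals the total of the original updates.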

Edge computing and the Internet of Things (IoT) are closely intertwined, given their shared focus on data generation and processing. Edge computing is a key enabler of IoT, providing a distributed computing infrastructure that can handle the massive volume of data generated by IoT devices. IoT devices, such as sensors and wearables, generate vast amounts of data at the network edge, which can overwhelm traditional centralized servers. By processing this data locally with Edge computing, organizations can reduce latency and improve scalability. Edge computing also supports real-time analytics, letting organizations draw insights from IoT data as it arrives, which is crucial in applications such as predictive maintenance and anomaly detection.
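As an illustration of processing IoT data locally, the sketch below keeps a sliding window of recent sensor readings on the device and forwards a reading upstream only when its z-score against that window exceeds a threshold. The window length, the threshold of 3.0, and the synthetic stream are assumptions chosen for the example; a simple z-score test stands in here for the more sophisticated anomaly-detection models used in practice.

```python
import statistics
from collections import deque


class EdgeAnomalyFilter:
    """Sliding-window z-score filter that runs on the edge device (assumed design)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # recent readings, kept only on the device
        self.threshold = threshold

    def process(self, reading: float) -> bool:
        """Return True if this reading should be forwarded upstream as an anomaly."""
        is_anomaly = False
        if len(self.history) >= 2:  # stdev needs at least two data points
            values = list(self.history)
            mean = statistics.fmean(values)
            stdev = statistics.stdev(values)
            if stdev > 0 and abs(reading - mean) / stdev > self.threshold:
                is_anomaly = True
        self.history.append(reading)
        return is_anomaly


detector = EdgeAnomalyFilter(window=20, threshold=3.0)
# Hypothetical stream: a slowly cycling temperature with one spike at index 30.
stream = [20.0 + 0.1 * (i % 5) for i in range(30)] + [35.0, 20.1, 20.2]
forwarded = [(i, r) for i, r in enumerate(stream) if detector.process(r)]
print(forwarded)  # [(30, 35.0)]
```

Only one of the 33 readings is forwarded. The spike is still added to the window, which temporarily inflates the standard deviation; a real deployment might exclude flagged values from the history instead.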

Edge computing and Decentralized Machine Learning have significant implications for the future of AI. As organizations seek to harness the power of AI, both are likely to play a pivotal role in shaping how the technology is deployed.

One key area where Edge computing and AI converge is autonomous systems, such as self-driving cars and drones. These systems rely on real-time data processing and analysis to make split-second decisions, which Edge computing supports by minimizing latency and improving bandwidth efficiency. Edge computing also allows autonomous systems to operate in environments with limited network connectivity, such as remote areas or disaster zones, because data can be processed and analyzed locally.

Another key application of Edge computing and AI is healthcare. Edge computing makes it possible to deploy AI-powered healthcare applications on wearable devices, such as smartwatches and fitness trackers, supporting remote patient monitoring and disease management. Its real-time data processing and analysis are also valuable in applications such as telemedicine and emergency response.