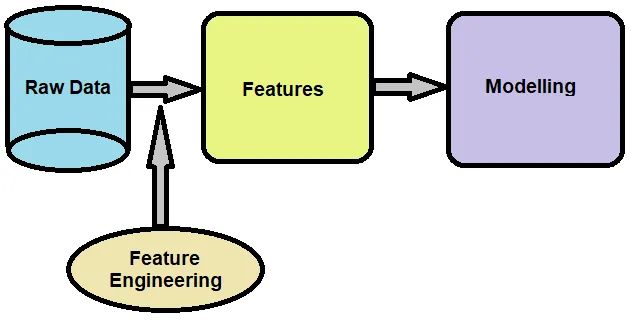

Feature engineering:

Feature engineering in data science is the process of turning raw data into informative inputs for machine learning models. It covers selecting, modifying, and creating features to improve the model's performance. Essential techniques include normalization, which scales numerical data to a standard range; encoding categorical variables, which converts non-numeric data into numerical form; and creating new features by combining or transforming existing ones. Feature engineering also deals with filling in missing data and reducing the number of features to avoid overfitting. By shaping the data to highlight essential patterns, feature engineering makes machine learning models more accurate and efficient, leading to better predictions and insights.

Why is feature engineering important in machine learning?

- Improves Model Performance: Good features help the model find patterns and relationships in the data, leading to more accurate predictions. This is especially helpful for complex datasets where vital information isn’t obvious.

- Enhances Data Quality: Feature engineering fixes missing values, irrelevant features, and inconsistencies. Cleaning up the data this way makes it more reliable for model training.

- Facilitates Better Understanding: Creating new features can uncover trends and insights that aren’t visible in the raw data. This helps in better understanding the problem and making informed decisions.

- Reduces Overfitting: By reducing the number of features and keeping only the important ones, feature engineering helps prevent overfitting, so the model performs well on both the training data and new, unseen data.

- Speeds Up Training: Efficient feature engineering simplifies the data, making the training process quicker and less resource-intensive. This is crucial for working with large datasets and complex models.

Techniques in Feature Engineering

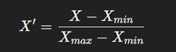

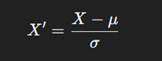

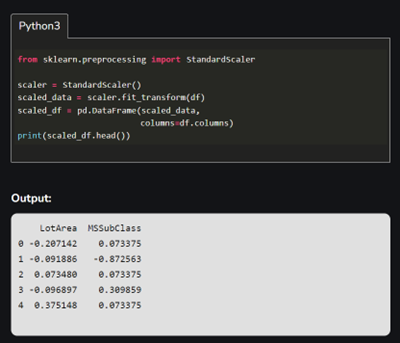

Normalization:
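As noted in the introduction, normalization scales numerical data to a standard range. One common way to do this is min-max scaling, sketched below in plain Python. The function name `min_max_scale`, its parameters, and the sample `ages` values are illustrative assumptions, not taken from the article.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Rescale a list of numbers linearly into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no spread; map every value to new_min.
        return [new_min for _ in values]
    span = hi - lo
    return [new_min + (v - lo) / span * (new_max - new_min) for v in values]


# Hypothetical feature values, for illustration only.
ages = [18, 30, 42, 66]
scaled = min_max_scale(ages)
print(scaled)
```

In a real pipeline the minimum and maximum would normally be computed on the training data only and then reused for new data, so that both are scaled the same way.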

Encoding Categorical Variables

Purpose: Encoding categorical variables converts non-numeric data into a numerical format that machine learning algorithms can process. This step is essential because most machine learning algorithms require numerical input to perform mathematical computations.

Methods:

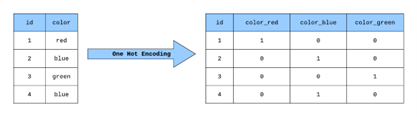

- One-Hot Encoding: Used for categorical features like "Gender" or "Marital Status" in customer segmentation tasks. This method converts each category in a categorical variable into a binary vector, where a unique column represents each category. One-hot encoding is appropriate when there is no ordinal relationship between categories. For example, one-hot encoding a color feature with three categories (red, green, and blue) produces three binary features:

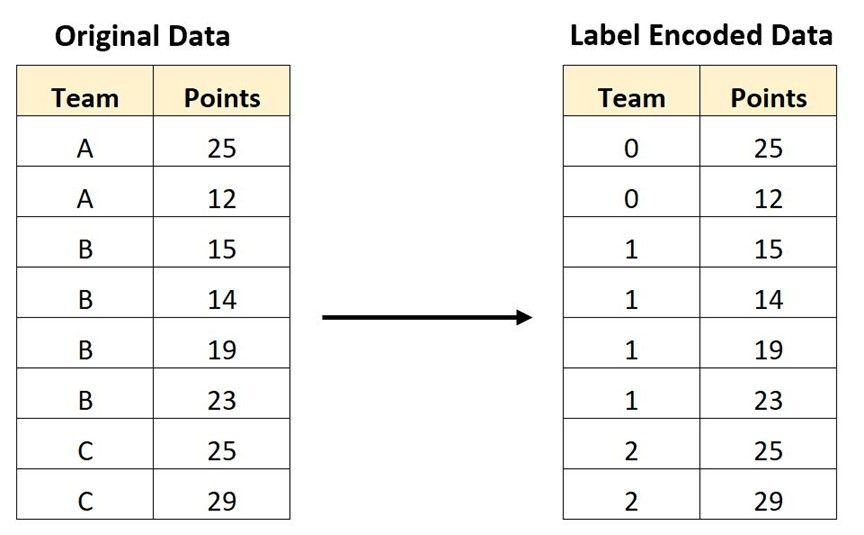

- Label Encoding: This method is best suited to ordered categorical features like "Education Level" (High School, Bachelor's, Master's, PhD). It assigns a unique integer to each category. For instance, categories A, B, and C might be encoded as 0, 1, and 2, respectively. This approach is simple and space-efficient, but when applied to categories that have no natural order it introduces an unintended ordinal relationship, so it is not appropriate for all categorical data types.

- Target Encoding: Utilized in scenarios like predicting customer churn, where categorical variables like "Country" or "Product Type" are replaced by the average churn rate of each category. Also known as mean encoding, this method replaces each category with the mean of the target variable for that category. For example, if we predict house prices and one of the features is the neighborhood, target encoding would replace each neighborhood category with the average house price in that neighborhood. This can capture more information than one-hot or label encoding, but it can leak target information into the features if the means are computed on the same rows the model is evaluated on.
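The three encoding methods above can be sketched with pandas as follows. This is a minimal illustration, not a definitive implementation: the toy DataFrame, its column names, and its values are assumptions made up for the example.

```python
import pandas as pd

# Hypothetical toy data; columns and values are illustrative only.
df = pd.DataFrame({
    "color": ["red", "green", "blue", "green"],
    "education": ["High School", "Bachelor's", "PhD", "Master's"],
    "neighborhood": ["A", "B", "A", "B"],
    "price": [100.0, 200.0, 140.0, 260.0],
})

# One-hot encoding: one binary column per color category.
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)

# Label encoding: map each level to an integer, using an explicit order
# so that the integers reflect the real ranking of education levels.
education_order = ["High School", "Bachelor's", "Master's", "PhD"]
education_map = {level: i for i, level in enumerate(education_order)}
education_code = df["education"].map(education_map)

# Target (mean) encoding: replace each neighborhood with its mean price.
# Here the means come from the whole toy table for brevity; in practice
# they should be computed on training rows only to limit leakage.
neighborhood_mean = df.groupby("neighborhood")["price"].mean()
neighborhood_encoded = df["neighborhood"].map(neighborhood_mean)

print(one_hot)
print(education_code.tolist())
print(neighborhood_encoded.tolist())
```

Passing an explicit category order for label encoding avoids relying on alphabetical order, which would rank "Bachelor's" below "High School".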

Creating New Features

How do I perform feature engineering?

Case Studies