What is Generative AI?

Machine learning models can be broadly divided into two categories: (1) discriminative models and (2) generative models.

Discriminative Models

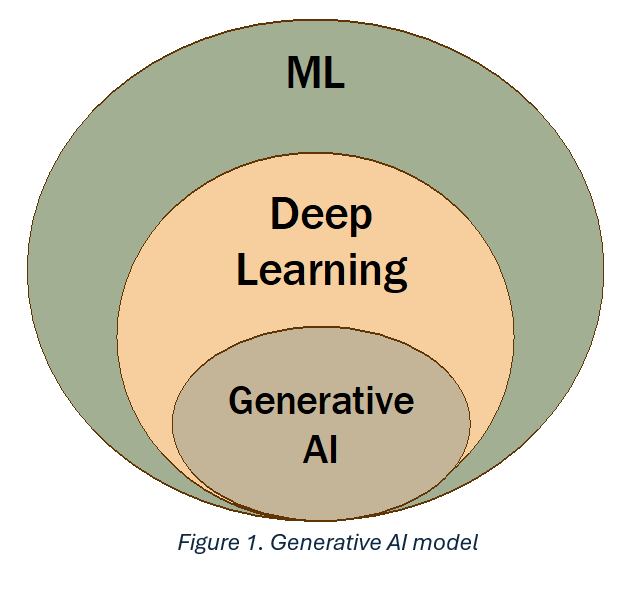

Discriminative models focus on prediction and classification tasks and rely on labeled datasets. Their core objective is to learn the relationship between input features (X) and the corresponding labels (Y), often using deep neural networks. Discriminative models thrive on structured datasets and excel in tasks where classifying or predicting outcomes is paramount. In other words, building a discriminative model means learning a model F that estimates Y conditioned on X, that is, the conditional distribution P(Y | X).
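To make "Y conditioned on X" concrete, here is a minimal sketch of a discriminative model: logistic regression on a single feature, trained with plain gradient descent. The toy data, learning rate, and epoch count are illustrative assumptions. Note that the model only ever learns P(Y = 1 | X); it has no way to produce new X values.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.1, epochs=2000):
    """Fit P(Y=1 | X=x) = sigmoid(w * x + b) with batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Toy labeled dataset: one input feature X and a binary label Y.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)


def predict_proba(x: float) -> float:
    """The model's output: the conditional probability P(Y=1 | X=x)."""
    return sigmoid(w * x + b)


print(f"P(Y=1 | X=-1.0) = {predict_proba(-1.0):.3f}")
print(f"P(Y=1 | X=1.0)  = {predict_proba(1.0):.3f}")
```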

Generative Models

In contrast, generative models take a different route. Instead of labeling existing data, they try to learn the underlying distribution of the data, which enables them to generate new, realistic instances. Picture this: you have a cluster of data points, and the goal is to learn the underlying distribution, or probability function, from which new data points can be drawn. Generative models shine in their ability to generate data points similar to the ones they were trained on. The emphasis is not on predicting specific labels but on understanding and replicating the distribution of the data. Predicting the next word in a sequence, or generating an entirely new word based on neighboring words, captures the essence of generative models.

Now, let's highlight a crucial distinction. Discriminative models, as we've discussed, predict Y given X; that is, they model P(Y | X). Generative models go a step further: they aim to learn the joint distribution P(X, Y) of X and Y (or simply P(X) when there are no labels), which allows them to generate new instances by sampling from this distribution. What emerges from training is a statistical model denoted F(x, y), which encapsulates the joint distribution of both the input (X) and the output (Y) within a unified framework. It's about creating, not predicting.
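As a contrast to the discriminative case, here is a minimal sketch of a generative model that learns the joint distribution by factoring it as P(X, Y) = P(Y) · P(X | Y), assuming (purely for illustration) a one-dimensional Gaussian for each class. Because it models the data itself, it can sample brand-new (x, y) pairs. The toy data and the Gaussian assumption are ours, not the course's.

```python
import random
import statistics


def fit_joint(xs, ys):
    """Estimate P(X, Y) = P(Y) * P(X | Y), with a 1-D Gaussian for each class."""
    params = {}
    for c in sorted(set(ys)):
        xc = [x for x, y in zip(xs, ys) if y == c]
        params[c] = {
            "prior": len(xc) / len(xs),    # P(Y = c)
            "mean": statistics.fmean(xc),  # mean of P(X | Y = c)
            "std": statistics.pstdev(xc),  # standard deviation of P(X | Y = c)
        }
    return params


def sample(params, rng: random.Random):
    """Draw a brand-new (x, y) pair: first y ~ P(Y), then x ~ P(X | Y = y)."""
    classes = list(params)
    y = rng.choices(classes, weights=[params[c]["prior"] for c in classes])[0]
    x = rng.gauss(params[y]["mean"], params[y]["std"])
    return x, y


xs = [1.0, 1.2, 0.8, 1.1, 4.0, 4.2, 3.8, 4.1]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
params = fit_joint(xs, ys)

rng = random.Random(0)
new_points = [sample(params, rng) for _ in range(5)]
print(new_points)  # new (x, y) pairs that resemble, but do not copy, the training data
```

Real generative models replace the per-class Gaussian with a deep network, but the workflow of fitting a distribution and then sampling from it is the same.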

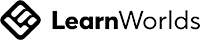

Today, generative models built with deep neural networks are commonly referred to as generative AI. In summary, generative AI is a subset of deep learning that focuses on creating, not just predicting. It's a powerful paradigm that opens avenues for creativity and innovation, allowing machines to generate content autonomously.

To emphasize the difference, consider an image recognition scenario. A discriminative model would classify an image into predefined categories, such as cat or dog. A generative model, on the other hand, could generate a new image that resembles the images it was trained on, or produce a variation of an input image. It's not about classification; it's about creating new instances within the learned distribution.

Figure 2. Difference between discriminative and generative machine learning models.

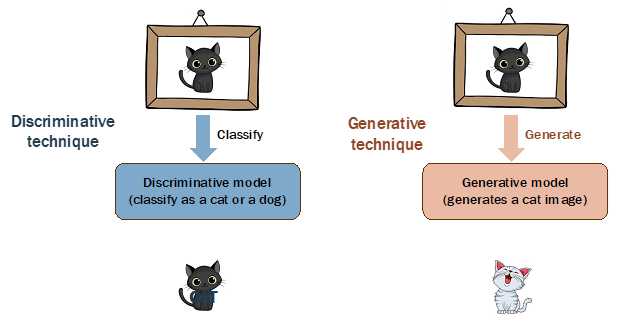

Discriminative models, as we discussed, output labels: discrete values for classification or continuous values for regression. This output is the model's prediction, based on the input data and the learned relationship between X and Y. Now, consider the generative focus of this course. Generative models operate in a distinct realm, one where you may not have labeled or structured data. In this paradigm, we work with unstructured, unlabeled, or semi-labeled datasets, embracing the diversity of data at our disposal.

To comprehend the essence of generative AI, let's revisit the concept of mapping an unknown function from input (X) to output (Y). In machine learning, our goal is to fit a (typically highly nonlinear) function to the data points we have. This function is not written down explicitly; it is embodied by the architecture, learned weights, hyperparameters, and activation functions of a deep neural network. To further differentiate between generative and discriminative models, consider the nature of the function's output. When the model outputs a real number, it's a regression problem, which is a discriminative task. If the output is discrete, representing different classes or categories, it's a classification problem, another discriminative task.

However, the defining characteristic of generative models lies in their output being more than just labels or numbers. When the output itself becomes a sample data point, like a piece of natural language, an image, or an audio segment, you've entered the realm of generative models. It's a model that learns the essence of the data and creates new instances within that learned distribution.
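The distinction between the three kinds of output can be sketched side by side. In this toy example (all data and weights are hypothetical), each data point is a short 3-value "signal" standing in for an image patch or audio snippet. Regression returns a real number, classification returns a discrete label, and generation returns a new data point with the same shape as the training data, sampled here from a deliberately simple independent Gaussian per position.

```python
import random
import statistics

# Toy training data: each data point is a short 3-value "signal",
# standing in for something like an image patch or an audio snippet.
DATA = [[0.9, 0.5, 0.1], [1.1, 0.4, 0.2], [1.0, 0.6, 0.0]]

# Hypothetical weights standing in for a trained network's parameters.
WEIGHTS = [0.5, 0.3, 0.2]


def regression_output(x: list[float]) -> float:
    """Regression: the output is a single real number."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def classification_output(x: list[float]) -> int:
    """Classification: the output is one of a fixed set of discrete labels (0 or 1)."""
    return 1 if regression_output(x) > 0.5 else 0


def generative_output(rng: random.Random) -> list[float]:
    """Generation: the output is a new data point shaped like the training data.

    Each position is sampled from a Gaussian fitted to that position in DATA
    (a deliberately simple, independent-per-position model).
    """
    columns = list(zip(*DATA))
    return [rng.gauss(statistics.fmean(c), statistics.pstdev(c)) for c in columns]


x = DATA[0]
print(regression_output(x))                 # a real number
print(classification_output(x))             # a discrete label
print(generative_output(random.Random(0)))  # a new 3-value sample
```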

Different Classes of Generative AI Models

Within the realm of generative AI, a diverse array of models exists. One such category is large language models (LLMs), designed for text-to-text interactions. These models take text as input and produce corresponding text as output. Trained to unravel the intricacies of language, they excel at tasks such as language translation, predicting the next word in a sequence, and a wide range of other language-related challenges. Their applications extend to generating new content, a capability we've witnessed in the ChatGPT interface. Their proficiency in understanding and manipulating text opens avenues for diverse applications, from content generation to classification tasks.
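Next-word prediction, the core task behind text-to-text models, can be illustrated with a toy bigram model that counts which word follows which. This is a minimal sketch under a big simplifying assumption: real LLMs condition on long contexts with transformer networks, not on the single previous word. The corpus is made up for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str):
    """Count how often each word follows each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word: str):
    """Greedy next-word prediction: the most frequent follower of `word`."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


def generate(counts, start: str, n_words: int, rng: random.Random) -> str:
    """Text-to-text generation: extend a prompt by sampling one word at a time."""
    out = [start]
    for _ in range(n_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this word
        candidates, weights = zip(*followers.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(most_likely_next(model, "the"))
print(generate(model, "the", 5, random.Random(0)))
```

Sampling (rather than always taking the most likely word) is what lets the same prompt produce different continuations.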

Another important category of generative AI models is text-to-image models. Here, the input (X) is text, and the output (Y) is an image. These models, relatively new but immensely promising, are trained on large datasets in which each image is paired with a concise caption: a textual description that captures the essence of the visual content. One prominent technique for achieving this is diffusion. The capabilities extend beyond image generation; these models also enable image editing. Picture this: you instruct the model to "draw a sunset with a serene view and beautiful scenery," and in response it crafts a visual masterpiece that captures your textual prompt.
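Diffusion models are trained to reverse a gradual noising process. The sketch below shows only the forward (noising) half, in the style of DDPM: a noise schedule and the closed-form jump to step t, x_t = sqrt(alpha_bar_t) · x_0 + sqrt(1 − alpha_bar_t) · noise. The linear schedule from 1e-4 to 0.02 over 1000 steps is a commonly used choice but an assumption here, and the learned, text-conditioned denoising network that actually generates images is omitted.

```python
import math
import random


def linear_beta_schedule(T: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances beta_1..beta_T, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s): how much signal survives to step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def noisy_sample(x0: list[float], t: int, alpha_bar: list[float], rng: random.Random) -> list[float]:
    """Sample x_t ~ q(x_t | x_0) directly: sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


T = 1000
alpha_bar = cumulative_alphas(linear_beta_schedule(T))
image = [0.2, 0.8, 0.5, 0.9]  # a tiny flattened "image" with pixel values in [0, 1]
rng = random.Random(0)
slightly_noisy = noisy_sample(image, 10, alpha_bar, rng)  # still close to the image
pure_noise = noisy_sample(image, T - 1, alpha_bar, rng)   # almost pure Gaussian noise
```

Generation runs this process in reverse: starting from noise, a trained network repeatedly removes a little noise, guided by the caption.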

Another new frontier of generative AI is the burgeoning field of text-to-video models. The premise is captivating: these models aim to generate entire video sequences from an initial text description. The text input, ranging from a single sentence to a full script, serves as the creative blueprint, and the output is a video that mirrors, or is inspired by, the input text. Imagine describing a narrative and watching it unfold as a video: a convergence of storytelling and visual representation.

The generative AI progression extends to text-to-3D models, a pivotal stride in design applications. Picture a scenario where users generate three-dimensional objects from textual descriptions. This holds immense promise for augmented reality (AR) and virtual reality (VR) experiences: describing a scene in text prompts the model to populate the environment with the corresponding components automatically, seamlessly fusing textual input and three-dimensional design. Text-to-3D models also find application in product design. Users can describe the functionality of a product in text, and the model synthesizes a corresponding shape. This text-driven synthesis opens avenues for innovative design methodologies.

Text-to-task models take a textual instruction as input and carry out the corresponding task. Consider a scenario where a user instructs the model to navigate a web UI, extract relevant information, and compile a report from the gathered data. The text-to-task model interprets the instruction, works out the underlying task, and interacts with the web UI to fetch the required information. This link between textual prompts and concrete actions illustrates the transformative potential of text-to-task models.
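The web-report scenario can be sketched as a plan-then-execute loop: an instruction is turned into a sequence of tool calls, and each tool's result feeds the next. Everything here is hypothetical: `FAKE_WEB` stands in for a real browser, the tools are toy functions, and the regex-based `plan` is only a placeholder for the model, which in a real text-to-task system would produce the plan itself.

```python
import re

# Hypothetical stand-in for a web UI: URLs mapped to page text.
FAKE_WEB = {"https://example.com/prices": "Widget: $10\nGadget: $25\nWidget Pro: $40"}


def fetch_page(url: str) -> str:
    """Hypothetical tool: 'navigate' to a page and return its text."""
    return FAKE_WEB.get(url, "")


def extract_lines(text: str, keyword: str) -> list[str]:
    """Hypothetical tool: keep only the lines that mention the keyword."""
    return [line for line in text.splitlines() if keyword.lower() in line.lower()]


def compile_report(title: str, items: list[str]) -> str:
    """Hypothetical tool: format the gathered items as a short report."""
    return "\n".join([title] + [f"- {item}" for item in items])


def plan(instruction: str) -> list[tuple[str, dict]]:
    """Placeholder for the model: turn an instruction into a sequence of tool calls.

    A real text-to-task model would produce this plan itself; here a regex pulls
    out the URL and the word after 'for', just so the example runs.
    """
    url = re.search(r"https?://\S+", instruction).group(0)
    keyword = re.search(r"\bfor (\w+)", instruction).group(1)
    return [
        ("fetch", {"url": url}),
        ("extract", {"keyword": keyword}),
        ("report", {"title": f"Report: {keyword}"}),
    ]


def run(instruction: str) -> str:
    """Execute the plan step by step, feeding each tool's result into the next."""
    result = None
    for action, args in plan(instruction):
        if action == "fetch":
            result = fetch_page(args["url"])
        elif action == "extract":
            result = extract_lines(result, args["keyword"])
        elif action == "report":
            result = compile_report(args["title"], result)
    return result


print(run("Find prices for widget on https://example.com/prices and compile a report"))
```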

Foundation Models

Expanding on the broader domain of generative AI, the concept of the foundation model has emerged as a pivotal force shaping the landscape of machine learning. A foundation model is a very large deep learning model trained on a broad, diverse body of data, which may include text, images, video, and other formats. Imagine a model that integrates these heterogeneous data types into a unified framework. This amalgamation of data serves as the bedrock for a versatile, general-purpose model trained with unsupervised (more precisely, self-supervised) learning.

The magnitude of a foundation model is reflected in its scale, often billions of parameters. Through unsupervised learning, the model performs representation learning, absorbing patterns from the many data sources in its training set. The result is a foundational representation that captures the essence of diverse data modalities. The strength of a foundation model lies in its adaptability: once trained, it becomes a cornerstone, a general yet powerful starting point that can be fine-tuned and customized for many tasks, whether language modeling, vision, or other specialized applications. The key lies in adapting this foundational representation to each specific task, yielding outputs tailored to distinct use cases.
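One simple way to adapt a foundation model is "linear probing": keep the pre-trained model frozen and train only a small task-specific head on its output representation. (Full fine-tuning would also update the foundation model's own weights; this sketch does not.) Here `frozen_features` is a hypothetical stand-in that counts a few sentiment words instead of returning a learned embedding, so the example runs without any model weights; the labeled examples are made up.

```python
import math


def frozen_features(text: str) -> list[float]:
    """Hypothetical stand-in for a pre-trained foundation model's representation.

    A real foundation model would return a learned embedding; this toy counts
    a few sentiment words so the example runs with no model weights. It is
    never updated during adaptation.
    """
    words = text.lower().split()
    pos = sum(w in {"good", "great", "love"} for w in words)
    neg = sum(w in {"bad", "awful", "hate"} for w in words)
    return [float(pos), float(neg), 1.0]  # the constant 1.0 acts as a bias input


def predict(w: list[float], text: str) -> float:
    """Task-specific head: logistic regression on top of the frozen features."""
    z = sum(wi * fi for wi, fi in zip(w, frozen_features(text)))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, lr: float = 0.5, epochs: int = 500) -> list[float]:
    """Adapt to a new task by training only the head's weights (SGD on log-loss)."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            err = predict(w, text) - label
            w = [wi - lr * err * fi for wi, fi in zip(w, frozen_features(text))]
    return w


# Small labeled dataset for the downstream task (sentiment classification).
examples = [("I love this", 1), ("great product", 1), ("awful service", 0), ("I hate it", 0)]
head = train_head(examples)
print(round(predict(head, "good movie"), 3), round(predict(head, "bad movie"), 3))
```

The design choice is cost: only three weights are trained, while the expensive representation is reused unchanged across tasks.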

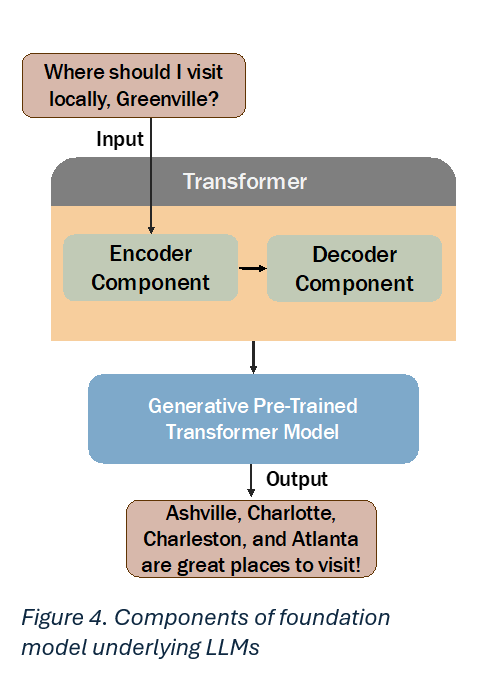

A foundation model is trained on various types of data, ranging from images to text. This data is processed by a transformer, a neural network architecture that, in its original form, consists of an encoder and a decoder. Think of it as a mechanism that learns to represent data points in an abstract, latent semantic space; this is what representation learning means here. GPT models (Generative Pre-trained Transformers) are built on the decoder part of this architecture and are pre-trained to predict the next token. When you hear terms like GPT-3.5 or GPT-4, they refer to different versions of these generative pre-trained transformer models. Once such a model is trained, it serves as a foundation model: the basis for subsequent customization.
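The core operation inside a transformer is scaled dot-product attention, softmax(Q·Kᵀ / √d)·V, and GPT-style decoders add a causal mask so each token attends only to itself and earlier tokens. The sketch below is a minimal single-head version in plain Python; it omits the learned query/key/value projections, multiple heads, positional encodings, and feed-forward layers of a real transformer, and passes the token vectors in directly as an illustrative assumption.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of T vectors (one per token position), each of length d.
    Position i attends only to positions 0..i, so a token never sees the
    tokens after it; this is what lets a decoder predict the next token.
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Similarity of token i's query to each earlier (or same) token's key.
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the value vectors of the visible positions.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out


# Three token vectors; in a real transformer q, k, v are learned projections of embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(x, x, x):
    print([round(val, 3) for val in row])
```

The first output row equals the first value vector exactly, because the first token can attend only to itself.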

What are the advantages of foundation models?

What are the disadvantages of foundation models?
